I’ve started toying with animation. While it’s not a full-blown animation framework yet, it works as a solid proof of concept. The idea is simple: cycle through images over a period of time to simulate movement.

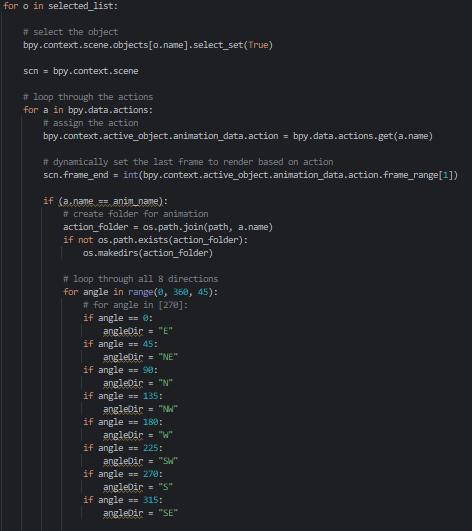

I began by using Blender to render a pawn running in each of the 8 directions used in isometric movement, with 20 frames per direction (160 frames in total). Below is a short snippet from the Blender script I used.

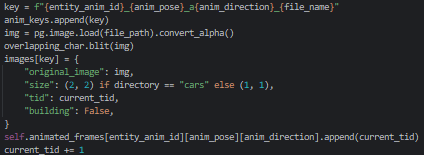
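The script above is only shown as a screenshot. The sketch below shows the loop structure such a render script typically follows: rotate the pawn in 45° steps and render a fixed number of frames for each direction. The direction labels, the "0° = east, counter-clockwise" convention and the `run_<direction>_<frame>.png` naming scheme are all assumptions. The actual `bpy` calls (setting the rotation, the current frame and the output path, then rendering) are left out so that the sketch runs outside Blender.

```python
import math

# Hypothetical direction labels, counter-clockwise from east in 45-degree steps.
DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]
FRAMES_PER_DIRECTION = 20


def render_plan(frames_per_direction=FRAMES_PER_DIRECTION):
    """Return (direction, rotation_radians, frame, filename) for every frame to render.

    Inside Blender, each entry would map to: set the pawn's Z rotation,
    set the scene's current frame, render to `filename` (bpy calls omitted).
    """
    plan = []
    for i, name in enumerate(DIRECTIONS):
        angle = math.radians(i * 45)  # pawn rotation for this direction
        for frame in range(frames_per_direction):
            filename = f"run_{name}_{frame:03d}.png"  # hypothetical naming scheme
            plan.append((name, angle, frame, filename))
    return plan


plan = render_plan()
print(len(plan))   # 160
print(plan[0][3])  # run_E_000.png
```

Encoding the direction and frame number in the filename makes the next step (loading frames into the atlas) a matter of parsing names rather than keeping a separate manifest.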

Next, the rendered image files are read dynamically into the texture atlas (partial snippet):

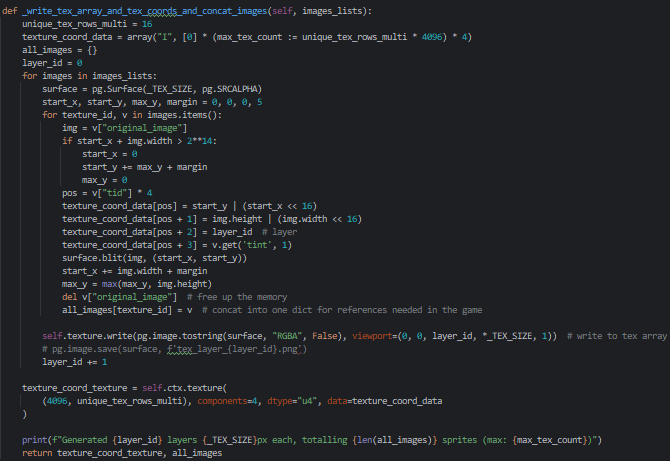
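Before packing, the loader has to know which files belong to which animation and in what order. Here is a minimal sketch of that grouping step. It assumes a hypothetical `<animation>_<direction>_<frame>.png` naming scheme. In practice `filenames` could come from `os.listdir(frame_dir)`, and the pixel data could then be loaded with something like Pillow's `Image.open`. Both of those are omitted here.

```python
import re
from collections import defaultdict

# Hypothetical naming scheme: <animation>_<direction>_<frame>.png, e.g. run_NE_007.png
FRAME_PATTERN = re.compile(r"^(?P<anim>[a-z]+)_(?P<dir>[A-Z]{1,2})_(?P<frame>\d+)\.png$")


def group_frames(filenames):
    """Group frame files by (animation, direction), ordered by frame number.

    Files that don't match the naming scheme are ignored.
    """
    groups = defaultdict(list)
    for name in filenames:
        match = FRAME_PATTERN.match(name)
        if match is None:
            continue
        key = (match["anim"], match["dir"])
        groups[key].append((int(match["frame"]), name))
    # Sort numerically (so frame 10 follows frame 9), then drop the frame number.
    return {key: [name for _, name in sorted(frames)] for key, frames in groups.items()}


files = ["run_N_001.png", "run_N_000.png", "run_NE_000.png", "notes.txt"]
print(group_frames(files))
# {('run', 'N'): ['run_N_000.png', 'run_N_001.png'], ('run', 'NE'): ['run_NE_000.png']}
```

Sorting on the parsed integer rather than the raw string avoids the classic `frame_10` before `frame_9` ordering bug when frame numbers aren't zero-padded.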

Most importantly, I’m building several large textures (atlases) in which frames are packed tightly to conserve space. This isn’t just for animations; it applies to anything that uses images, such as buildings, pavement, and props.

By grouping related frames or sprites into shared atlases, we reduce the number of texture switches during rendering, which helps keep performance smooth even as the asset count grows.

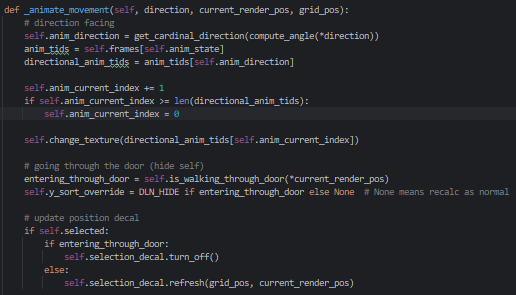
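The post doesn’t say which packing algorithm the atlas builder uses. As an illustration, here is a simple shelf packer. It sorts sprites by height, fills rows left to right, and starts a new row when the current one is full. This is a swapped-in technique, not necessarily the one used here. Packers such as MaxRects usually achieve tighter layouts.

```python
def shelf_pack(sizes, atlas_width):
    """Pack rectangles into an atlas of fixed width using a simple shelf algorithm.

    sizes: dict mapping sprite name -> (width, height) in pixels.
    Returns (positions, atlas_height), where positions maps
    name -> (x, y) of the sprite's top-left corner.
    """
    # Placing taller sprites first keeps each shelf from wasting vertical space.
    order = sorted(sizes, key=lambda name: sizes[name][1], reverse=True)
    positions = {}
    x = y = shelf_height = 0
    for name in order:
        w, h = sizes[name]
        if w > atlas_width:
            raise ValueError(f"{name} is wider than the atlas")
        if x + w > atlas_width:
            # Current shelf is full: start a new one directly below it.
            y += shelf_height
            x = shelf_height = 0
        positions[name] = (x, y)
        x += w
        shelf_height = max(shelf_height, h)
    return positions, y + shelf_height


positions, height = shelf_pack({"a": (64, 64), "b": (64, 32), "c": (64, 48)}, 128)
print(positions, height)  # {'a': (0, 0), 'c': (64, 0), 'b': (0, 64)} 96
```

The returned positions are what the renderer later needs to find each frame inside the atlas.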

Finally, on every frame we instruct the shader to advance each instance to the next image in its sequence:

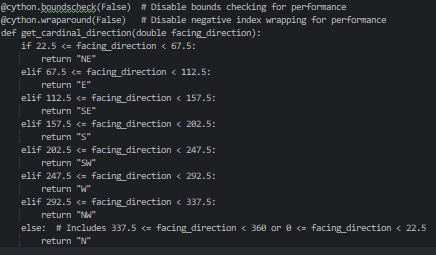
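The shader code itself isn’t shown. A common way to drive it is to pass each instance the normalized UV rectangle of its current frame, so that switching images only means updating a few floats per instance rather than binding a different texture. Here is a sketch of that per-instance data, assuming frames are stored as pixel rectangles with a top-left origin (`frame_uv` and `instance_uv` are hypothetical names).

```python
def frame_uv(frame_rect, atlas_size):
    """Convert a frame's pixel rectangle in the atlas to normalized UV coordinates.

    frame_rect: (x, y, width, height) in pixels, top-left origin.
    atlas_size: (atlas_width, atlas_height) in pixels.
    Returns (u0, v0, u1, v1) in the 0..1 range.
    """
    x, y, w, h = frame_rect
    atlas_w, atlas_h = atlas_size
    return (x / atlas_w, y / atlas_h, (x + w) / atlas_w, (y + h) / atlas_h)


def instance_uv(frame_rects, frame_index, atlas_size):
    """UV rectangle for an instance showing `frame_index` of an animation (wraps around)."""
    rect = frame_rects[frame_index % len(frame_rects)]
    return frame_uv(rect, atlas_size)


print(frame_uv((64, 0, 64, 64), (256, 128)))  # (0.25, 0.0, 0.5, 0.5)
```

If the engine uses OpenGL-style texture coordinates, whose origin is the bottom-left corner, the v values need flipping (`v = 1 - v`).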

The pawn’s facing direction (one of the eight) is determined by a fast Cython function that returns a direction string. That string is then used to look up the matching animation frames’ coordinates in the atlas.
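The Cython function isn’t shown. At its core, this kind of lookup is usually a single `atan2` call that places the movement vector into one of eight 45° sectors. Here is a plain-Python sketch of that idea. The direction labels and the axis convention (+x east, +y north) are assumptions.

```python
import math

# Counter-clockwise from east; must match the order used when the frames were rendered.
DIRECTIONS = ("E", "NE", "N", "NW", "W", "SW", "S", "SE")


def direction_from_delta(dx, dy):
    """Map a movement vector to one of 8 compass directions.

    Assumes +x points east and +y points north (negate dy for screen
    coordinates where y grows downward). Each direction owns a 45-degree
    sector centred on its axis.
    """
    angle = math.atan2(dy, dx)  # range -pi..pi, 0 = east
    sector = round(angle / (math.pi / 4)) % 8
    return DIRECTIONS[sector]


print(direction_from_delta(1, 1))   # NE
print(direction_from_delta(0, -1))  # S
```

A zero vector maps to `"E"` here. A stationary pawn should probably keep its previous direction instead. In Cython, the same function can be made fast by typing `dx` and `dy` as `double` and using `from libc.math cimport atan2`, which avoids Python-level calls.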

Next, we calibrate the per-frame duration, in seconds, for each animation, and voilà!
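A per-frame duration decouples animation speed from the render frame rate. Instead of advancing one image per rendered frame, each pawn accumulates elapsed time and shows the image for the current time slot. Here is a minimal sketch, assuming the game loop supplies a delta time `dt` in seconds (`FrameClock` is a hypothetical name).

```python
class FrameClock:
    """Pick an animation frame from elapsed time and a per-frame duration.

    frame_duration is in seconds (e.g. 0.05 s per frame = 20 frames per second).
    """

    def __init__(self, frame_count, frame_duration, loop=True):
        self.frame_count = frame_count
        self.frame_duration = frame_duration
        self.loop = loop
        self.elapsed = 0.0

    def update(self, dt):
        """Add dt seconds of game time and return the frame index to display."""
        self.elapsed += dt
        # True division then truncation; see the note below on why not `//`.
        index = int(self.elapsed / self.frame_duration)
        if self.loop:
            return index % self.frame_count
        return min(index, self.frame_count - 1)  # hold the last frame


run = FrameClock(frame_count=20, frame_duration=0.05)
print(run.update(1.0))  # 0 (one full 20-frame cycle has elapsed)
```

Using `/` instead of `//` matters with durations like 0.05 that aren't exactly representable in binary. In Python, `1.0 // 0.05` evaluates to `19.0`, which would show the wrong frame at the end of a cycle.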

If you’re curious about the pathfinding part of this, you can find it here.